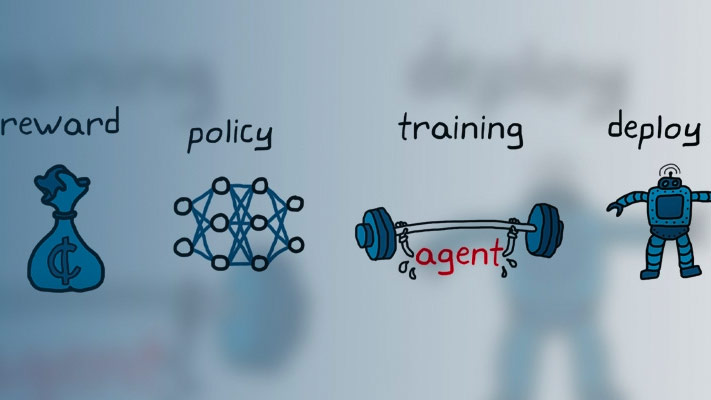

Design and Train Agent Using Reinforcement Learning Designer

This example shows how to design and train a DQN agent for an environment with a discrete action space using Reinforcement Learning Designer.

Open the Reinforcement Learning Designer App

Open the Reinforcement Learning Designer app.

reinforcementLearningDesigner

Initially, no agents or environments are loaded in the app.

Import Cart-Pole Environment

When using Reinforcement Learning Designer, you can import an environment from the MATLAB® workspace or create a predefined environment. For more information, see Create MATLAB Environments for Reinforcement Learning Designer and Create Simulink Environments for Reinforcement Learning Designer.

For this example, use the predefined discrete cart-pole MATLAB environment. To import this environment, on the Reinforcement Learning tab, in the Environments section, select New > Discrete Cart-Pole.

In the Environments pane, the app adds the imported Discrete CartPole environment. To rename the environment, click the environment text. You can also import multiple environments in the session.

To view the dimensions of the observation and action spaces, click the environment text. The app shows the dimensions in the Preview pane.

![The Preview pane shows the dimensions of the state and action spaces being [4 1] and [1 1], respectively](http://www.tianjin-qmedu.com/fr/fr/help/reinforcement-learning/ug/app_dqn_cartpole_03b.png)

This environment has a continuous four-dimensional observation space (the positions and velocities of both the cart and pole) and a discrete one-dimensional action space consisting of two possible forces, –10 N or 10 N. This environment is used in the Train DQN Agent to Balance Cart-Pole System example. For more information on predefined control system environments, see Load Predefined Control System Environments.
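You can query the same specifications at the MATLAB command line. The following is a minimal sketch. It assumes that Reinforcement Learning Toolbox is installed, and it reads the observation and action specifications directly from the predefined environment.

```matlab
% Create the predefined discrete cart-pole environment
env = rlPredefinedEnv("CartPole-Discrete");

% Observation specification: continuous, four states
obsInfo = getObservationInfo(env);
disp(obsInfo.Dimension)     % [4 1], matching the Preview pane

% Action specification: finite set of force values
actInfo = getActionInfo(env);
disp(actInfo.Dimension)     % [1 1], matching the Preview pane
disp(actInfo.Elements)      % the two possible forces, in newtons
```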

Create DQN Agent for Imported Environment

To create an agent, on the Reinforcement Learning tab, in the Agent section, click New. In the Create agent dialog box, specify the agent name, the environment, and the training algorithm. The default agent configuration uses the imported environment and the DQN algorithm. For this example, change the number of hidden units from 256 to 24. For more information on creating agents, see Create Agents Using Reinforcement Learning Designer.

Click OK.

The app adds the new agent to the Agents pane and opens a corresponding Agent_1 document.
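A roughly equivalent agent can also be created at the command line. The following sketch assumes that setting `NumHiddenUnit` in `rlAgentInitializationOptions` reproduces the hidden-unit setting in the app dialog. Other defaults chosen by the app may differ.

```matlab
% Environment and its specifications
env = rlPredefinedEnv("CartPole-Discrete");
obsInfo = getObservationInfo(env);
actInfo = getActionInfo(env);

% Use 24 hidden units instead of the default 256
initOpts = rlAgentInitializationOptions('NumHiddenUnit',24);

% Create a DQN agent whose critic network is generated automatically
agent = rlDQNAgent(obsInfo,actInfo,initOpts);
```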

To view a brief summary of the DQN agent features and the observation and action specifications for the agent, click Overview.

When you create a DQN agent in Reinforcement Learning Designer, the agent uses a default deep neural network structure for its critic. To view the critic network, on the DQN Agent tab, click View Critic Model.

The Deep Learning Network Analyzer opens and displays the critic structure.

Close the Deep Learning Network Analyzer.
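The critic network can also be inspected at the command line. The following sketch extracts the critic from a DQN agent created as above and opens it in the same analyzer. It assumes that both Reinforcement Learning Toolbox and Deep Learning Toolbox are installed.

```matlab
% Re-create the DQN agent with 24 hidden units
env = rlPredefinedEnv("CartPole-Discrete");
agent = rlDQNAgent(getObservationInfo(env),getActionInfo(env), ...
    rlAgentInitializationOptions('NumHiddenUnit',24));

% Extract the critic and its underlying neural network
critic = getCritic(agent);
criticNet = getModel(critic);

% Display the critic network in the Deep Learning Network Analyzer
analyzeNetwork(criticNet)
```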

Train Agent

To train your agent, on the Train tab, first specify options for training the agent. For information on specifying training options, see Specify Training Options in Reinforcement Learning Designer.

For this example, specify the maximum number of training episodes by setting Max Episodes to 1000. For the other training options, use their default values. By default, training stops when the average number of steps per episode (over the last 5 episodes) reaches 500.
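The following sketch writes the same settings as command-line training options. It assumes that the app's defaults correspond to the `rlTrainingOptions` defaults for episode length, averaging window, and stopping criterion. They are listed explicitly here for clarity.

```matlab
trainOpts = rlTrainingOptions( ...
    'MaxEpisodes',1000, ...                  % Max Episodes = 1000
    'MaxStepsPerEpisode',500, ...            % episode length cap (assumed app default)
    'ScoreAveragingWindowLength',5, ...      % average over the last 5 episodes
    'StopTrainingCriteria','AverageSteps', ...
    'StopTrainingValue',500);                % stop when the average reaches 500 steps
```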

To start training, click Train.

During training, the app opens the Training Session tab and displays the training progress in the Training Results document.

Here, training stops when the average number of steps per episode reaches 500. To better visualize the episode and average rewards, clear the Show Episode Q0 option.
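For comparison, the following is a minimal sketch of the same training run at the command line. It assumes the agent and options described above. Results will vary from run to run because training is stochastic.

```matlab
% Environment and DQN agent with 24 hidden units
env = rlPredefinedEnv("CartPole-Discrete");
agent = rlDQNAgent(getObservationInfo(env),getActionInfo(env), ...
    rlAgentInitializationOptions('NumHiddenUnit',24));

% Stop when the 5-episode running average reaches 500 steps
trainOpts = rlTrainingOptions('MaxEpisodes',1000, ...
    'ScoreAveragingWindowLength',5, ...
    'StopTrainingCriteria','AverageSteps','StopTrainingValue',500);

% Train; the agent is a handle object, so it is updated in place
trainingStats = train(agent,env,trainOpts);
```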

To accept the training results, on the Training Session tab, click Accept. In the Agents pane, the app adds the trained agent, agent1_Trained.

Simulate Agent and Inspect Simulation Results

To simulate the trained agent, on the Simulate tab, first select agent1_Trained in the Agent drop-down list, then configure the simulation options. For this example, use the default number of episodes (10) and maximum episode length (500). For more information on specifying simulation options, see Specify Simulation Options in Reinforcement Learning Designer.

To simulate the agent, click Simulate.

The app opens the Simulation Session tab. After the simulation is completed, the Simulation Results document shows the reward for each episode as well as the reward mean and standard deviation.
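The same per-episode statistics can be computed at the command line. The following sketch assumes that a trained agent named `agent1_Trained` is already in the MATLAB workspace, for example after exporting it as described in Export Agent and Save Session. It also assumes that each episode's reward is logged as a `timeseries`.

```matlab
% Assumes agent1_Trained exists in the workspace (exported from the app)
env = rlPredefinedEnv("CartPole-Discrete");
simOpts = rlSimulationOptions('NumSimulations',10,'MaxSteps',500);
experiences = sim(env,agent1_Trained,simOpts);

% Cumulative reward of each simulated episode
episodeRewards = zeros(numel(experiences),1);
for k = 1:numel(experiences)
    episodeRewards(k) = sum(experiences(k).Reward.Data);
end

fprintf("Mean reward: %g, standard deviation: %g\n", ...
    mean(episodeRewards),std(episodeRewards));
```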

To analyze the simulation results, click Inspect Simulation Data.

In the Simulation Data Inspector, you can view the saved signals for each simulation episode. For more information, see Simulation Data Inspector (Simulink).
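The logged signals can also be plotted directly from the experience structure returned by `sim`. The following sketch plots the cart position (state 1) and pole angle (state 3) for the sixth episode. It assumes that `agent1_Trained` is in the workspace. It also assumes that the observation `timeseries` stores one 4-by-1 sample per time step.

```matlab
% Assumes agent1_Trained exists in the workspace (exported from the app)
env = rlPredefinedEnv("CartPole-Discrete");
experiences = sim(env,agent1_Trained, ...
    rlSimulationOptions('NumSimulations',10,'MaxSteps',500));

% Observations are a struct of timeseries; take its single field
xp = experiences(6);
obsName = fieldnames(xp.Observation);
obsTs = xp.Observation.(obsName{1});

% Data assumed 4-by-1-by-N; squeeze to 4-by-N
states = squeeze(obsTs.Data);
plot(obsTs.Time,states(1,:),obsTs.Time,states(3,:))
legend("Cart position","Pole angle")
xlabel("Time")
```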

The following image shows the first and third states of the cart-pole system (cart position and pole angle) for the sixth simulation episode. The agent is able to successfully balance the pole for 500 steps, even though the cart position undergoes moderate swings. You can modify some DQN agent options such as BatchSize and TargetUpdateFrequency to promote faster and more robust learning. For more information, see Train DQN Agent to Balance Cart-Pole System.
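At the command line, these settings are `rlDQNAgentOptions` properties. The batch size property there is named `MiniBatchSize`. The values below are illustrative only and are not tuned results.

```matlab
env = rlPredefinedEnv("CartPole-Discrete");
obsInfo = getObservationInfo(env);
actInfo = getActionInfo(env);

% Illustrative values; tune them for your problem
agentOpts = rlDQNAgentOptions( ...
    'MiniBatchSize',256, ...          % experiences sampled per learning step
    'TargetUpdateFrequency',4, ...    % update the target critic every 4 steps
    'TargetSmoothFactor',1);          % 1 = copy critic weights to the target

agent = rlDQNAgent(obsInfo,actInfo, ...
    rlAgentInitializationOptions('NumHiddenUnit',24),agentOpts);
```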

Close the Simulation Data Inspector.

To accept the simulation results, on the Simulation Session tab, click Accept.

In the Results pane, the app adds the simulation results structure, experience1.

Export Agent and Save Session

To export the trained agent to the MATLAB workspace for additional simulation, on the Reinforcement Learning tab, under Export, select the trained agent.
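After export, the agent is an ordinary workspace variable, so you can keep it between MATLAB sessions with `save` and `load`. The following is a minimal sketch. The file name `cartPoleDQNAgent.mat` is hypothetical.

```matlab
% Assumes agent1_Trained has been exported to the workspace
save("cartPoleDQNAgent.mat","agent1_Trained")   % hypothetical file name

% Later, restore the agent from the MAT-file
clear agent1_Trained
load("cartPoleDQNAgent.mat","agent1_Trained")
```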

To save the app session, on the Reinforcement Learning tab, click Save Session. In the future, to resume your work where you left off, you can open the session in Reinforcement Learning Designer.

Simulate Agent at the Command Line

To simulate the agent at the MATLAB command line, first load the cart-pole environment.

env = rlPredefinedEnv("CartPole-Discrete");

The cart-pole environment has an environment visualizer that allows you to see how the system behaves during simulation and training.

Plot the environment and perform a simulation using the trained agent that you previously exported from the app.

plot(env)                        % open the cart-pole visualizer
xpr2 = sim(env,agent1_Trained);  % simulate the exported agent

During the simulation, the visualizer shows the movement of the cart and pole. The trained agent is able to stabilize the system.

Finally, display the cumulative reward for the simulation.

sum(xpr2.Reward)

ans = 500

As expected, the cumulative reward is 500.

See Also

Reinforcement Learning Designer | analyzeNetwork