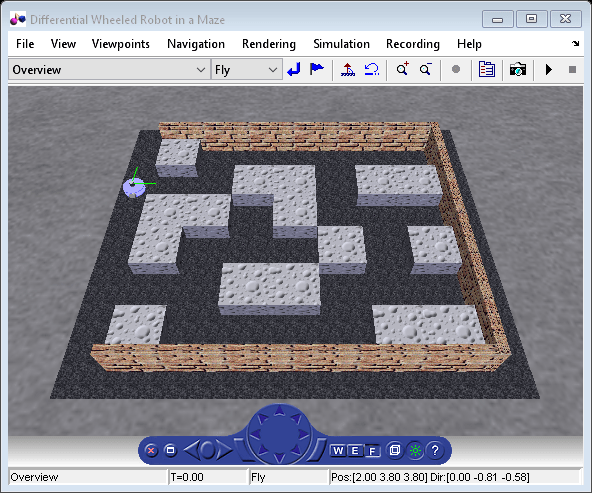

Differential Wheeled Robot in a Maze

The vrmaze example shows how collision detection in Simulink® 3D Animation™ can be used to simulate a differential wheeled robot solving a maze. The robot control algorithm uses information from virtual ultrasonic sensors that detect surrounding objects within a fixed range.

The robot is a simple differential wheeled robot equipped with two virtual ultrasonic sensors: one looks ahead, and the other points to the robot's left. The sensors are simplified; their active range is represented by green lines.
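To make "differential wheeled" concrete, here is a minimal sketch of standard differential-drive kinematics: the robot steers only by driving its two wheels at different speeds. The wheel radius, axle length, and function names are hypothetical, and the mapping to X3D fields assumes the floor is the X3D x-z plane (y up) with the robot's local forward axis along +x; the actual vrmaze model may compute its pose differently.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float      # position on the floor plane
    y: float      # position on the floor plane
    theta: float  # heading in radians, counterclockwise from +x


def step_diff_drive(pose: Pose, omega_left: float, omega_right: float,
                    wheel_radius: float, axle_length: float, dt: float) -> Pose:
    """Advance the pose by one forward-Euler step.

    omega_left / omega_right are wheel angular speeds (rad/s). All parameter
    names and values are hypothetical, not taken from the vrmaze model.
    """
    v_left = wheel_radius * omega_left         # linear speed of the left wheel
    v_right = wheel_radius * omega_right       # linear speed of the right wheel
    v = (v_right + v_left) / 2.0               # forward speed of the axle midpoint
    omega = (v_right - v_left) / axle_length   # yaw rate, positive = turn left
    return Pose(
        x=pose.x + v * math.cos(pose.theta) * dt,
        y=pose.y + v * math.sin(pose.theta) * dt,
        theta=pose.theta + omega * dt,
    )


def to_x3d(pose: Pose):
    """Map the planar pose to X3D translation and axis-angle rotation.

    Assumption: planar y maps to X3D -z, so a rotation of theta about +y
    turns the robot's local +x axis toward the planar +y direction.
    """
    translation = [pose.x, 0.0, -pose.y]
    rotation = [0.0, 1.0, 0.0, pose.theta]  # SFRotation: axis (x, y, z) + angle
    return translation, rotation
```

Equal wheel speeds drive the robot straight; equal and opposite speeds spin it in place, which is how a differential drive can turn around at a wall without moving forward.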

The sensors are implemented as X3D LinePickSensor nodes. These sensors detect approximate collisions between rays (modeled as IndexedLineSet nodes) and arbitrary geometries in the scene; for geometric primitives, the collisions are exact. One of the LinePickSensor output fields, isActive, becomes TRUE as soon as a collision between the sensor ray and a surrounding scene object is detected.
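For a sense of what an exact ray-versus-primitive test involves, the sketch below checks a sensor segment against an axis-aligned box using the slab method. This is an illustrative stand-in, not the picking code of any X3D browser or of Simulink 3D Animation; `segment_hits_box` and `is_active` are hypothetical names, with `is_active` mimicking the isActive output for a single ray.

```python
from typing import Iterable, Sequence, Tuple

Vec3 = Sequence[float]


def segment_hits_box(p0: Vec3, p1: Vec3, box_min: Vec3, box_max: Vec3) -> bool:
    """Return True if the segment p0 -> p1 intersects the axis-aligned box.

    Slab method: clip the segment parameter t in [0, 1] against the two
    planes bounding the box on each axis; the segment hits the box if and
    only if the clipped interval stays non-empty.
    """
    t_enter, t_exit = 0.0, 1.0
    for axis in range(3):
        d = p1[axis] - p0[axis]
        if abs(d) < 1e-12:
            # Segment is parallel to this slab: it must lie inside it.
            if p0[axis] < box_min[axis] or p0[axis] > box_max[axis]:
                return False
            continue
        t0 = (box_min[axis] - p0[axis]) / d
        t1 = (box_max[axis] - p0[axis]) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_enter = max(t_enter, t0)
        t_exit = min(t_exit, t1)
        if t_enter > t_exit:
            return False
    return True


def is_active(origin: Vec3, end: Vec3,
              obstacles: Iterable[Tuple[Vec3, Vec3]]) -> bool:
    """Hypothetical analogue of isActive: True if the ray touches any box."""
    return any(segment_hits_box(origin, end, lo, hi) for lo, hi in obstacles)
```

A wall slightly beyond the sensor's reach leaves isActive FALSE; it turns TRUE only once the segment actually reaches the wall, which is why the sensor length sets the detection range.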

When a sensor is activated, its line changes color from green to red; the color change is handled by a script written directly in the virtual world.

The model defines both a VR Sink block and a VR Source block, associated with the same virtual scene. The VR Source block reads the sensors' isActive signals, and the VR Sink block sets the robot's position and rotation in the virtual world.
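The closed loop formed by the two blocks (read sensor state from the scene, decide, write the new pose back into the same scene) can be sketched as a toy one-dimensional simulation. `VirtualScene`, `read_is_active`, and `set_translation` are hypothetical stand-ins for the roles of the VR Source and VR Sink blocks, not the Simulink 3D Animation API, and the "controller" here is just stop-when-blocked.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualScene:
    """Hypothetical 1-D stand-in for the shared virtual world.

    One wall sits at wall_x; the robot moves along +x and carries a single
    forward-looking sensor of length sensor_range.
    """
    wall_x: float = 1.0
    sensor_range: float = 0.3
    robot_x: float = 0.0
    history: list = field(default_factory=list)

    def read_is_active(self) -> bool:
        # Role of the VR Source block: read the sensor's isActive output.
        return self.wall_x - self.robot_x <= self.sensor_range

    def set_translation(self, x: float) -> None:
        # Role of the VR Sink block: write the robot position into the world.
        self.robot_x = x
        self.history.append(x)


def run(scene: VirtualScene, speed: float = 0.1, dt: float = 1.0,
        steps: int = 20) -> float:
    """Run the sense -> decide -> act loop for a fixed number of steps."""
    x = scene.robot_x
    for _ in range(steps):
        blocked = scene.read_is_active()   # sense (VR Source)
        v = 0.0 if blocked else speed      # decide (placeholder controller)
        x += v * dt                        # integrate the motion
        scene.set_translation(x)           # act (VR Sink)
    return x
```

Because both blocks refer to the same scene, each pose written through the sink changes what the source reads on the next step; that feedback is what closes the control loop.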

The robot control algorithm is implemented as a Stateflow® chart.
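The chart itself is not described here, so the following is only a guess at its flavor: with one front-facing and one left-facing sensor, a left-hand wall follower is a natural strategy. The sketch writes it as an explicit three-state machine in the Stateflow spirit (transitions defined per state). The states, the transition rules, and the wheel speeds in `WHEEL_SPEEDS` are all assumptions, not the vrmaze chart.

```python
from enum import Enum, auto
from typing import Tuple


class State(Enum):
    FORWARD = auto()     # wall on the left, path ahead clear
    TURN_RIGHT = auto()  # obstacle ahead: rotate away from it
    TURN_LEFT = auto()   # left wall lost: arc left to find it again


# Hypothetical (left, right) wheel speeds in rad/s for each state.
WHEEL_SPEEDS = {
    State.FORWARD: (2.0, 2.0),
    State.TURN_RIGHT: (1.0, -1.0),  # spin clockwise in place
    State.TURN_LEFT: (1.0, 2.0),    # right wheel faster: arc to the left
}


class WallFollower:
    """Assumed left-hand wall follower driven by two binary sensors."""

    def __init__(self) -> None:
        self.state = State.FORWARD

    def update(self, front_active: bool, left_active: bool) -> Tuple[float, float]:
        """Take one chart step: apply the current state's transitions,
        then return the wheel speeds for the resulting state."""
        s = self.state
        if s is State.FORWARD:
            if front_active:
                s = State.TURN_RIGHT
            elif not left_active:
                s = State.TURN_LEFT
        elif s is State.TURN_RIGHT:
            if not front_active:       # keep turning until the way ahead is clear
                s = State.FORWARD
        elif s is State.TURN_LEFT:
            if front_active:
                s = State.TURN_RIGHT
            elif left_active:          # wall found again on the left
                s = State.FORWARD
        self.state = s
        return WHEEL_SPEEDS[s]
```

Keeping the robot's left sensor in contact with a wall is enough to traverse any maze whose walls are all connected to the outer boundary.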

Several viewpoints are defined in the virtual world. Switch to the "Follow Robot" viewpoint to see the scene from a different perspective.