fsulaplacian

Rank features for unsupervised learning using Laplacian scores

Description

idx = fsulaplacian(X) ranks features (variables) in X using the Laplacian scores. The function returns idx, which contains the indices of features ordered by feature importance. You can use idx to select important features for unsupervised learning.

Examples

Rank Features by Importance

Load the sample data.

load ionosphere

Rank the features based on importance.

[idx,scores] = fsulaplacian(X);

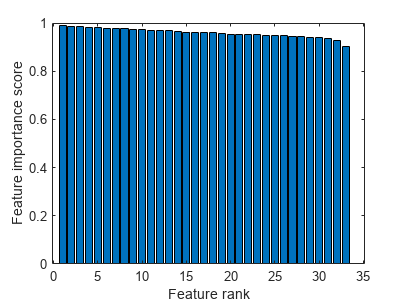

Create a bar plot of the feature importance scores.

bar(scores(idx))
xlabel('Feature rank')
ylabel('Feature importance score')

Select the top five most important features. Find the columns of these features in X.

idx(1:5)

ans = 1×5

    15    13    17    21    19

The 15th column of X is the most important feature.

Rank Features Using Specified Similarity Matrix

Compute a similarity matrix from Fisher's iris data set and rank the features using the similarity matrix.

Load Fisher's iris data set.

load fisheriris

Find the distance between each pair of observations in meas by using the pdist and squareform functions with the default Euclidean distance metric.

D = pdist(meas);
Z = squareform(D);

Construct the similarity matrix and confirm that it is symmetric.

S = exp(-Z.^2);
issymmetric(S)

ans = logical
   1

Rank the features.

idx = fsulaplacian(meas,'Similarity',S)

idx = 1×4

     3     4     1     2

Ranking using the similarity matrix S is the same as ranking by specifying 'NumNeighbors' as size(meas,1).

idx2 = fsulaplacian(meas,'NumNeighbors',size(meas,1))

idx2 = 1×4

     3     4     1     2

Input Arguments

X—Input data

numeric matrix

Input data, specified as an n-by-p numeric matrix. The rows of X correspond to observations (or points), and the columns correspond to features.

The software treats NaNs in X as missing data and ignores any row of X containing at least one NaN.

Data Types: single | double

Name-Value Pair Arguments

Specify optional comma-separated pairs of Name,Value arguments. Name is the argument name and Value is the corresponding value. Name must appear inside quotes. You can specify several name and value pair arguments in any order as Name1,Value1,...,NameN,ValueN.

Example: 'NumNeighbors',10,'KernelScale','auto' specifies the number of nearest neighbors as 10 and the kernel scale factor as 'auto'.

'Similarity'—Similarity matrix

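[] (empty matrix) (default) | symmetric matrix

Similarity matrix, specified as the comma-separated pair consisting of 'Similarity' and an n-by-n symmetric matrix, where n is the number of observations. The similarity matrix (or adjacency matrix) represents the input data by modeling local neighborhood relationships among the data points. The values in a similarity matrix represent the edges (or connections) between nodes (data points) that are connected in a similarity graph. For more information, see Similarity Matrix.

If you specify the 'Similarity' value, then you cannot specify any other name-value pair argument. If you do not specify the 'Similarity' value, then the software computes a similarity matrix using the options specified by the other name-value pair arguments.

Data Types: single | double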

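'Distance'—Distance metric

character vector | string scalar | function handle

Distance metric, specified as the comma-separated pair consisting of 'Distance' and a character vector, string scalar, or function handle, as described in this table.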

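| Value | Description |
|---|---|
| 'euclidean' | Euclidean distance (default) |
| 'seuclidean' | Standardized Euclidean distance. Each coordinate difference between observations is scaled by dividing by the corresponding element of the standard deviation computed from X. Use the 'Scale' name-value pair argument to specify different scaling factors. |
| 'mahalanobis' | Mahalanobis distance using the sample covariance of X, cov(X,'omitrows'). Use the 'Cov' name-value pair argument to specify a different covariance matrix. |
| 'cityblock' | City block distance |
| 'minkowski' | Minkowski distance. The default exponent is 2. Use the 'P' name-value pair argument to specify a different exponent. |
| 'chebychev' | Chebychev distance (maximum coordinate difference) |
| 'cosine' | One minus the cosine of the included angle between observations (treated as vectors) |
| 'correlation' | One minus the sample correlation between observations (treated as sequences of values) |
| 'hamming' | Hamming distance, which is the percentage of coordinates that differ |
| 'jaccard' | One minus the Jaccard coefficient, which is the percentage of nonzero coordinates that differ |
| 'spearman' | One minus the sample Spearman's rank correlation between observations (treated as sequences of values) |
| @distfun | Custom distance function handle. A distance function has the form function D2 = distfun(ZI,ZJ) % calculation of distance ... If your data is not sparse, you can generally compute distance more quickly by using a built-in distance instead of a function handle. |

For more information, see Distance Metrics.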
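As an illustration of the custom distance option, here is a minimal sketch of a distance function handle. It assumes the same calling convention that pdist uses for custom distances: ZI is a single observation (one row), ZJ contains one observation per row, and D2 returns one distance per row of ZJ. The name distfun and the data are hypothetical. This handle simply recomputes the Euclidean distance, so the built-in 'euclidean' option would normally be faster.

```matlab
% Hypothetical custom distance (assumes the pdist-style convention):
% ZI is 1-by-p (one observation), ZJ is m-by-p (m observations),
% and D2 is m-by-1 (distance from ZI to each row of ZJ).
distfun = @(ZI, ZJ) sqrt(sum((ZJ - ZI).^2, 2));   % Euclidean distance via implicit expansion

ZI = [0 0];
ZJ = [3 4; 6 8; 0 1];
D2 = distfun(ZI, ZJ);   % distances from ZI to each row of ZJ
```

Under that assumption, you would pass the handle as fsulaplacian(X,'Distance',distfun).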

When you use the 'seuclidean', 'minkowski', or 'mahalanobis' distance metric, you can specify the additional name-value pair argument 'Scale', 'P', or 'Cov', respectively, to control the distance metrics.

Example: 'Distance','minkowski','P',3 specifies to use the Minkowski distance metric with an exponent of 3.

'P'—Exponent for Minkowski distance metric

2 (default) | positive scalar

Exponent for the Minkowski distance metric, specified as the comma-separated pair consisting of 'P' and a positive scalar.

This argument is valid only if 'Distance' is 'minkowski'.

Example: 'P',3

Data Types: single | double
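To make the role of the exponent concrete, the following sketch evaluates the Minkowski distance between two hypothetical observations for the default exponent 2 and for P = 3. The names u, v, and minkowski are illustrative only; this is the textbook formula, not the internal implementation used by fsulaplacian.

```matlab
u = [0 0];          % hypothetical observation 1
v = [3 4];          % hypothetical observation 2
% Minkowski distance with exponent P
minkowski = @(a, b, P) sum(abs(a - b).^P)^(1/P);

d2 = minkowski(u, v, 2);   % P = 2 reduces to the Euclidean distance
d3 = minkowski(u, v, 3);   % P = 3, as in 'Distance','minkowski','P',3
```

A larger exponent puts more weight on the largest coordinate difference, which is why d3 is smaller than d2 here.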

'Cov'—Covariance matrix for Mahalanobis distance metric

cov(X,'omitrows') (default) | positive definite matrix

Covariance matrix for the Mahalanobis distance metric, specified as the comma-separated pair consisting of 'Cov' and a positive definite matrix.

This argument is valid only if 'Distance' is 'mahalanobis'.

Example: 'Cov',eye(4)

Data Types: single | double
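The effect of the covariance matrix can be seen with a small sketch of the standard Mahalanobis formula, sqrt((u - v) * inv(C) * (u - v)'). The observations and the diagonal matrix C are hypothetical values chosen for illustration; they are not defaults used by fsulaplacian.

```matlab
u = [0 0];                  % hypothetical observation 1
v = [2 3];                  % hypothetical observation 2
C = diag([4 9]);            % hypothetical positive definite covariance ('Cov')
diffVec = u - v;

% Mahalanobis distance; right division by C avoids forming inv(C) explicitly
dM = sqrt((diffVec / C) * diffVec');

% With C = eye(2), the Mahalanobis distance reduces to the Euclidean distance
dE = sqrt((diffVec / eye(2)) * diffVec');
```

Dividing each squared difference by the corresponding variance means that a difference of 3 along a direction with variance 9 counts the same as a difference of 2 along a direction with variance 4.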

'Scale'—Scaling factors for standardized Euclidean distance metric

std(X,'omitnan') (default) | numeric vector of nonnegative values

Scaling factors for the standardized Euclidean distance metric, specified as the comma-separated pair consisting of 'Scale' and a numeric vector of nonnegative values.

Scale has length p (the number of columns in X), because each dimension (column) of X has a corresponding value in Scale. For each dimension of X, fsulaplacian uses the corresponding value in Scale to standardize the difference between observations.

This argument is valid only if 'Distance' is 'seuclidean'.

Data Types: single | double
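The following sketch shows why standardization matters when features have very different ranges. The data are hypothetical, and the distance is computed with the usual standardized Euclidean formula; because the data contain no NaNs, std(X) returns the same values as the documented default std(X,'omitnan').

```matlab
% Hypothetical data: 3 observations, p = 2 features on very different scales
X = [1 10; 2 20; 4 40];
Scale = std(X);                 % 1-by-p vector, one scale factor per column

xi = X(1, :);
xj = X(3, :);

% Standardized Euclidean distance: divide each coordinate difference by its scale factor
dS = sqrt(sum(((xi - xj) ./ Scale).^2));

% Unscaled Euclidean distance for comparison: dominated by the second feature
dE = sqrt(sum((xi - xj).^2));
```

After scaling, both features contribute equally to dS, whereas dE is driven almost entirely by the second column.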

'NumNeighbors'—Number of nearest neighbors

log(size(X,1)) (default) | positive integer

Number of nearest neighbors used to construct the similarity graph, specified as the comma-separated pair consisting of 'NumNeighbors' and a positive integer.

Example: 'NumNeighbors',10

Data Types: single | double

'KernelScale'—Scale factor

1 (default) | 'auto' | positive scalar

Scale factor for the kernel, specified as the comma-separated pair consisting of 'KernelScale' and 'auto' or a positive scalar. The software uses the scale factor to transform distances to similarity measures. For more information, see Similarity Graph.

The 'auto' option is supported only for the 'euclidean' and 'seuclidean' distance metrics. If you specify 'auto', then the software selects an appropriate scale factor using a heuristic procedure. This heuristic procedure uses subsampling, so estimates can vary from one call to another. To reproduce results, set a random number seed using rng before calling fsulaplacian.
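Because the 'auto' heuristic uses subsampling, a reproducible workflow resets the random number seed before each call. This usage sketch relies only on the syntax documented on this page and assumes that Statistics and Machine Learning Toolbox is installed (for fsulaplacian and the ionosphere sample data).

```matlab
load ionosphere                      % loads the sample predictor matrix X

rng('default')                       % fix the random number seed
idx1 = fsulaplacian(X,'KernelScale','auto');

rng('default')                       % reset to the same seed
idx2 = fsulaplacian(X,'KernelScale','auto');

isequal(idx1,idx2)                   % rankings match because the seed is the same
```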

Example: 'KernelScale','auto'

Output Arguments

More About

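Similarity Graph

A similarity graph models the local neighborhood relationships between data points in X as an undirected graph. The nodes in the graph represent data points, and the edges, which are directionless, represent the connections between the data points.

If the pairwise distance $\mathrm{Dist}_{i,j}$ between any two nodes $i$ and $j$ is positive (or larger than a certain threshold), then the similarity graph connects the two nodes using an edge [2]. The edge between the two nodes is weighted by the pairwise similarity $S_{i,j}$, where $S_{i,j} = \exp\left(-\left(\frac{\mathrm{Dist}_{i,j}}{\sigma}\right)^2\right)$, for a specified kernel scale value $\sigma$.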
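The kernel transformation can be sketched in base MATLAB as follows. The data and the kernel scale sigma = 5 are hypothetical, and all pairs are kept (no nearest-neighbor pruning), which mirrors the full similarity matrix built in the iris example. Nearby points get similarities close to 1, and distant points get similarities close to 0.

```matlab
% Hypothetical data: 3 observations in 2-D
X = [0 0; 3 4; 6 8];
sigma = 5;                                 % hypothetical kernel scale ('KernelScale')

% Pairwise Euclidean distances using implicit expansion (R2016b or later)
sq = sum(X.^2, 2);                         % squared norm of each row
Dist = sqrt(max(sq + sq' - 2*(X*X'), 0));  % clamp tiny negatives caused by round-off

% Kernel transformation from distances to similarities
S = exp(-(Dist ./ sigma).^2);
```

Here Dist(1,2) is 5 and Dist(1,3) is 10, so S(1,2) is exp(-1) and S(1,3) is exp(-4).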

fsulaplacian constructs a similarity graph using the nearest neighbor method. The function connects points in X that are nearest neighbors. Use 'NumNeighbors' to specify the number of nearest neighbors.
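A nearest neighbor similarity graph can be sketched as below. The 1-D data, k = 1, and sigma = 1 are hypothetical. The sketch connects points i and j when either one is among the other's k nearest neighbors. That OR rule is one common kNN-graph convention discussed in [2]; this sketch does not claim that fsulaplacian symmetrizes the graph exactly this way.

```matlab
% Hypothetical 1-D data: two small clusters, n = 5 observations
X = [0; 1; 2; 10; 11];
k = 1;          % hypothetical number of nearest neighbors
sigma = 1;      % kernel scale (the documented default is 1)
n = size(X, 1);

% Pairwise Euclidean distances (implicit expansion, R2016b or later)
sq = sum(X.^2, 2);
Dist = sqrt(max(sq + sq' - 2*(X*X'), 0));

% Adjacency: connect each point to its k nearest neighbors
A = false(n);
for i = 1:n
    d = Dist(i, :);
    d(i) = Inf;                  % a point is not its own neighbor
    [~, order] = sort(d);
    A(i, order(1:k)) = true;
end
A = A | A';                      % undirected graph: OR rule (an assumption)

% Weight the edges with the kernel; unconnected pairs keep similarity 0
S = zeros(n);
S(A) = exp(-(Dist(A) ./ sigma).^2);
```

The resulting graph has edges 1-2, 2-3, and 4-5 only, so the two clusters are not connected.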

Similarity Matrix

A similarity matrix is a matrix representation of a similarity graph. The n-by-n matrix $S = (S_{i,j})_{i,j=1,\ldots,n}$ contains pairwise similarity values between connected nodes in the similarity graph. The similarity matrix of a graph is also called an adjacency matrix.

The similarity matrix is symmetric because the edges of the similarity graph are directionless. A value of $S_{i,j} = 0$ means that nodes $i$ and $j$ of the similarity graph are not connected.

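Degree Matrix

A degree matrix $D_g$ is an n-by-n diagonal matrix obtained by summing the rows of the similarity matrix $S$. That is, the $i$th diagonal element of $D_g$ is

$$D_g(i,i) = \sum_{j=1}^{n} S_{i,j}.$$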
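Computing the degree matrix takes one line in base MATLAB. The similarity matrix below is hypothetical; it describes a 3-node path graph in which node 1 connects to node 2 and node 2 connects to node 3.

```matlab
% Hypothetical similarity matrix of a 3-node path graph (1 -- 2 -- 3)
S = [0    0.5  0;
     0.5  0    0.25;
     0    0.25 0];

% Degree matrix: diagonal matrix of the row sums of S
Dg = diag(sum(S, 2));
```

The middle node has the largest degree (0.75) because it is the only node with two edges.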

Laplacian Matrix

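A Laplacian matrix, which is one way of representing a similarity graph, is defined as the difference between the degree matrix $D_g$ and the similarity matrix $S$:

$$L = D_g - S.$$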
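A small sketch of the definition, using a hypothetical similarity matrix, also shows two properties that follow from $L = D_g - S$ when $S$ is symmetric and nonnegative: each row of $L$ sums to zero, and $L$ is positive semidefinite, so its eigenvalues are nonnegative up to round-off.

```matlab
% Hypothetical similarity matrix of a 3-node path graph
S = [0 0.5 0; 0.5 0 0.25; 0 0.25 0];

Dg = diag(sum(S, 2));     % degree matrix
L = Dg - S;               % Laplacian matrix

rowSums = L * ones(3, 1); % every row of L sums to zero
lambda = eig(L);          % eigenvalues of the symmetric matrix L
```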

Algorithms

Laplacian Score

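The fsulaplacian function ranks features using Laplacian scores [1] obtained from a nearest neighbor similarity graph.

fsulaplacian computes the values in scores as follows:

1. For each data point in X, define a local neighborhood using the nearest neighbor method, and find the pairwise distances $\mathrm{Dist}_{i,j}$ for all points $i$ and $j$ in the neighborhood.
2. Convert the distances to the similarity matrix $S$ using the kernel transformation $S_{i,j} = \exp\left(-\left(\frac{\mathrm{Dist}_{i,j}}{\sigma}\right)^2\right)$, where $\sigma$ is the scale factor for the kernel as specified by the 'KernelScale' name-value pair argument.
3. Center each feature by removing its degree-weighted mean:
   $$\tilde{x}_r = x_r - \frac{x_r^T D_g \mathbf{1}}{\mathbf{1}^T D_g \mathbf{1}} \mathbf{1},$$
   where $x_r$ is the $r$th feature, $D_g$ is the degree matrix, and $\mathbf{1} = [1, \ldots, 1]^T$.
4. Compute the score $s_r$ for each feature:
   $$s_r = \frac{\tilde{x}_r^T S \tilde{x}_r}{\tilde{x}_r^T D_g \tilde{x}_r}.$$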
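The four steps can be sketched end to end in base MATLAB. This is an illustrative re-implementation under stated assumptions, not the toolbox source: the data, k = 2, and sigma = 5 are hypothetical, distances are Euclidean, and the kNN graph uses an OR symmetrization rule. The first feature separates two well-separated groups of observations, while the other two vary only within the groups, so the first feature is expected to receive the largest score.

```matlab
% Hypothetical data: n = 6 observations, p = 3 features.
% Feature 1 separates two groups; features 2 and 3 vary within each group.
X = [ 1 5 0.3;
      2 4 0.1;
      3 6 0.4;
     10 5 0.2;
     11 4 0.5;
     12 6 0.1];
k = 2;          % hypothetical number of nearest neighbors
sigma = 5;      % hypothetical kernel scale
[n, p] = size(X);

% Step 1: pairwise Euclidean distances and k-nearest-neighbor graph
sq = sum(X.^2, 2);
Dist = sqrt(max(sq + sq' - 2*(X*X'), 0));
A = false(n);
for i = 1:n
    d = Dist(i, :);
    d(i) = Inf;                  % a point is not its own neighbor
    [~, order] = sort(d);
    A(i, order(1:k)) = true;     % edges to the k nearest neighbors of point i
end
A = A | A';                      % undirected graph (OR rule, an assumption)

% Step 2: kernel transformation on connected pairs only
S = zeros(n);
S(A) = exp(-(Dist(A) ./ sigma).^2);
Dg = diag(sum(S, 2));            % degree matrix
one = ones(n, 1);

scores = zeros(1, p);
for r = 1:p
    xr = X(:, r);
    % Step 3: remove the degree-weighted mean
    xt = xr - (xr' * Dg * one) / (one' * Dg * one) * one;
    % Step 4: score (a larger value means a more important feature)
    scores(r) = (xt' * S * xt) / (xt' * Dg * xt);
end
[~, idx] = sort(scores, 'descend');   % feature indices ordered by importance
```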

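Note that [1] defines the Laplacian score as

$$L_r = \frac{\tilde{x}_r^T L \tilde{x}_r}{\tilde{x}_r^T D_g \tilde{x}_r} = 1 - \frac{\tilde{x}_r^T S \tilde{x}_r}{\tilde{x}_r^T D_g \tilde{x}_r},$$

where $L$ is the Laplacian matrix, defined as the difference between $D_g$ and $S$. The fsulaplacian function uses only the second term of this equation for the score value of scores, so that a large score value indicates an important feature.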
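The relationship between the score in [1] and the value stored in scores can be checked numerically. For the hypothetical similarity matrix and feature vector below, the two quantities add up to 1, because $\tilde{x}_r^T L \tilde{x}_r = \tilde{x}_r^T D_g \tilde{x}_r - \tilde{x}_r^T S \tilde{x}_r$.

```matlab
S = [0 0.5 0; 0.5 0 0.25; 0 0.25 0];   % hypothetical similarity matrix
Dg = diag(sum(S, 2));                   % degree matrix
L = Dg - S;                             % Laplacian matrix
one = ones(3, 1);

x = [1; 2; 6];                                        % hypothetical feature vector
xt = x - (x' * Dg * one) / (one' * Dg * one) * one;   % degree-weighted centering

Lr = (xt' * L * xt) / (xt' * Dg * xt);   % Laplacian score as defined in [1]: smaller is better
sr = (xt' * S * xt) / (xt' * Dg * xt);   % value reported in scores: larger is better
```

Because sr = 1 - Lr, sorting by descending sr gives the same ranking as sorting by ascending Lr.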

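Selecting features using the Laplacian score is consistent with minimizing the value

$$\frac{\sum_{i,j} \left(x_{ir} - x_{jr}\right)^2 S_{i,j}}{\mathrm{Var}(x_r)},$$

where $x_{ir}$ represents the $i$th observation of the $r$th feature. Minimizing this value implies that the algorithm prefers features with large variance. Also, the algorithm assumes that two data points of an important feature are close if and only if the similarity graph has an edge between the two data points.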
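The link between the Laplacian quadratic form and the sum over pairs is the identity $x^T L x = \frac{1}{2}\sum_{i,j} S_{i,j}(x_i - x_j)^2$, which holds for any symmetric $S$ with $L = D_g - S$. Because $L\mathbf{1} = 0$, centering the feature does not change this value. A quick numerical check with a hypothetical similarity matrix and feature vector:

```matlab
S = [0 0.5 0; 0.5 0 0.25; 0 0.25 0];   % hypothetical similarity matrix
Dg = diag(sum(S, 2));                   % degree matrix
L = Dg - S;                             % Laplacian matrix

x = [1; 2; 4];                          % hypothetical feature vector
quadForm = x' * L * x;                  % Laplacian quadratic form

% (x - x') is the n-by-n matrix whose (i,j) entry is x(i) - x(j)
pairwise = 0.5 * sum(sum(S .* (x - x').^2));
```

A feature whose values change little across connected pairs keeps this numerator small, which is what the Laplacian score rewards.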

References

[1] He, X., D. Cai, and P. Niyogi. “Laplacian Score for Feature Selection.” NIPS Proceedings. 2005.

[2] Von Luxburg, U. “A Tutorial on Spectral Clustering.” Statistics and Computing Journal. Vol. 17, Number 4, 2007, pp. 395–416.
