Train a nonlinear autoregressive with external input (NARX) neural network and predict on new time series data. Predicting a sequence of values in a time series is also known as multistep prediction. Closed-loop networks can perform multistep predictions. When external feedback is missing, closed-loop networks can continue to predict by using internal feedback. In NARX prediction, the future values of a time series are predicted from past values of that series, the feedback input, and an external time series.
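The model in the paragraph above is commonly written as follows. In this form, $n_x$ and $n_y$ are the largest input and feedback delays, and $f$ is the nonlinear mapping the network approximates. These symbols are chosen here for illustration; the page itself does not define them.

$$
y(t) = f\big(y(t-1), y(t-2), \ldots, y(t-n_y),\; x(t-1), x(t-2), \ldots, x(t-n_x)\big)
$$

In open-loop mode, the delayed $y$ values are the measured targets. In closed-loop mode, they are the network's own earlier outputs, which is what lets it keep predicting when external feedback is missing.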

Load the simple time series prediction data.

Partition the data into training data `XTrain` and `TTrain`, and data for prediction `XPredict`. Use `XPredict` to perform prediction after you create the closed-loop network.
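This split can be sketched in NumPy as shown below. It assumes a hypothetical 100-step series, with 80 steps for training and the last 20 steps held out for prediction. The function `split_series` and the sine/cosine data are illustrative only, not part of the original example. Only the external input is kept for the prediction window, because the targets there are what the closed-loop network has to predict.

```python
import numpy as np

def split_series(x, t, n_train):
    """Split input series x and target series t at index n_train.

    Returns (x_train, t_train, x_predict). Targets after n_train are
    dropped because only the external input is assumed to be known
    over the prediction horizon.
    """
    x = np.asarray(x, dtype=float)
    t = np.asarray(t, dtype=float)
    if x.shape != t.shape:
        raise ValueError("input and target series must have the same length")
    if not 0 < n_train < len(x):
        raise ValueError("n_train must leave data on both sides of the split")
    return x[:n_train], t[:n_train], x[n_train:]

# Hypothetical 100-step series: 80 steps for training, 20 for prediction.
steps = np.linspace(0.0, 10.0, 100)
x = np.sin(steps)
t = np.cos(steps)
x_train, t_train, x_predict = split_series(x, t, n_train=80)
```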

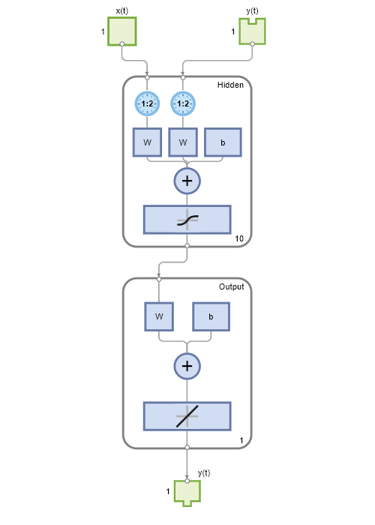

Create a NARX network. Define the input delays, feedback delays, and size of the hidden layers.
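To show what the input delays, feedback delays, and hidden layer size control, here is a minimal NumPy sketch of a NARX-style model. It has one `tanh` hidden layer and a linear output. The class `TinyNarx`, its random weights, and the choice of delays `[1, 2]` with 10 hidden neurons are hypothetical. This is not how the toolbox's `narxnet` is implemented.

```python
import numpy as np

class TinyNarx:
    """Minimal NARX-style model: one tanh hidden layer, linear output.

    Illustrative sketch only. input_delays and feedback_delays are
    lists of lags; feedback lags must be at least 1.
    """

    def __init__(self, input_delays, feedback_delays, hidden_size, seed=0):
        self.input_delays = list(input_delays)
        self.feedback_delays = list(feedback_delays)
        n_in = len(self.input_delays) + len(self.feedback_delays)
        rng = np.random.default_rng(seed)
        self.W1 = rng.normal(scale=0.5, size=(hidden_size, n_in))
        self.b1 = np.zeros(hidden_size)
        self.W2 = rng.normal(scale=0.5, size=hidden_size)
        self.b2 = 0.0

    def regressor(self, x, y, t):
        """Collect delayed inputs x[t-d] and delayed outputs y[t-d]."""
        past_x = [x[t - d] for d in self.input_delays]
        past_y = [y[t - d] for d in self.feedback_delays]
        return np.array(past_x + past_y)

    def step(self, x, y, t):
        """One-step output y_hat(t) computed from the delayed values."""
        h = np.tanh(self.W1 @ self.regressor(x, y, t) + self.b1)
        return float(self.W2 @ h + self.b2)

net = TinyNarx(input_delays=[1, 2], feedback_delays=[1, 2], hidden_size=10)
x = np.linspace(0.0, 1.0, 5)
y = np.linspace(1.0, 0.0, 5)
features = net.regressor(x, y, 3)  # [x[2], x[1], y[2], y[1]]
output = net.step(x, y, 3)
```

The number of network inputs is the total count of delayed values (here 2 + 2 = 4), while the hidden size only changes the width of `W1` and `W2`.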

Prepare the time series data using `preparets`. This function automatically shifts input and target time series by the number of steps needed to fill the initial input and layer delay states.
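The shifting described above can be approximated as follows. The first `max_delay` steps only fill the delay lines, so they become initial states and are removed from the sequences the network is evaluated on. This is a rough analogue under stated assumptions. The function `shift_for_delays` is hypothetical, and the toolbox's `preparets` packs its outputs into cell arrays with a different layout.

```python
import numpy as np

def shift_for_delays(x, t, input_delays, feedback_delays):
    """Move the first max-delay steps into initial delay states.

    The first max_delay values only fill the tapped delay lines, so they
    become initial states and are dropped from the sequences that the
    network is actually evaluated on.
    """
    x = np.asarray(x, dtype=float)
    t = np.asarray(t, dtype=float)
    max_delay = max(max(input_delays), max(feedback_delays))
    x_init, t_init = x[:max_delay], t[:max_delay]  # initial delay states
    x_seq, t_seq = x[max_delay:], t[max_delay:]    # shifted sequences
    return x_seq, t_seq, x_init, t_init

x = np.arange(10.0)
t = 10.0 * np.arange(10.0)
x_seq, t_seq, x_init, t_init = shift_for_delays(x, t, [1, 2], [1, 2])
```

With delays of 1 and 2, two steps are consumed as initial states, so the shifted sequences are two steps shorter than the originals.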

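A recommended practice is to fully create the network in an open loop, and then transform it to a closed loop for multistep-ahead prediction. The closed-loop network can then predict as many future values as you want. If you simulate the neural network in closed-loop mode only, the network can perform only as many predictions as there are time steps in the input series.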
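The difference between the two modes comes down to what is fed back as the delayed output. The sketch below uses a hypothetical linear model with two feedback lags and one input lag, and data generated exactly by that model. Open loop (series-parallel) feeds back measured targets. Closed loop (parallel) feeds back its own predictions, so it needs only the targets that precede the prediction window.

```python
import numpy as np

def predict(model, x, t, start, steps, closed_loop):
    """Predict `steps` outputs from index `start` with a 2-lag feedback model.

    Open loop (series-parallel) feeds back the measured targets t.
    Closed loop (parallel) feeds back its own earlier predictions, so it
    only needs the targets before `start`.
    """
    y = list(t[:start])  # known history before the prediction window
    out = []
    for k in range(start, start + steps):
        if closed_loop:
            y1, y2 = y[k - 1], y[k - 2]  # internal feedback
        else:
            y1, y2 = t[k - 1], t[k - 2]  # external (measured) feedback
        y_hat = model(x[k - 1], y1, y2)
        out.append(y_hat)
        y.append(y_hat)
    return np.array(out)

def model(x_prev, y1, y2):
    # Hypothetical model that happens to match the data exactly.
    return 0.5 * y1 - 0.3 * y2 + x_prev

rng = np.random.default_rng(0)
x = rng.normal(size=30)
t = np.zeros(30)
for k in range(2, 30):
    t[k] = model(x[k - 1], t[k - 1], t[k - 2])

open_pred = predict(model, x, t, start=20, steps=10, closed_loop=False)
closed_pred = predict(model, x, t[:20], start=20, steps=10, closed_loop=True)
```

With a perfect model, both modes agree. With a real, imperfect network, closed-loop errors compound because each mistake is fed back into later steps. This is one reason training is done in open loop first.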

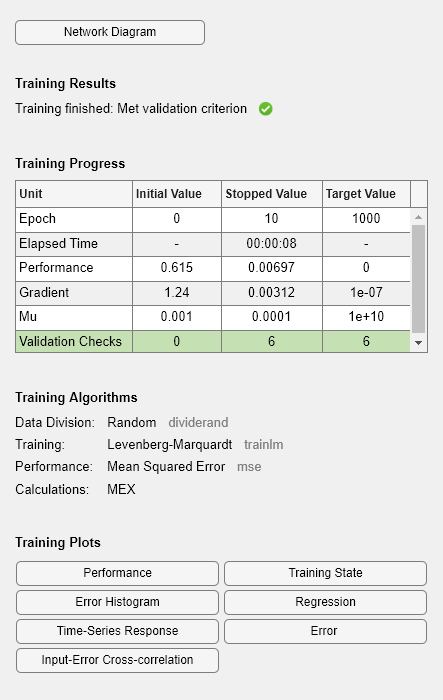

Train the NARX network. The `train` function trains the network in an open loop (series-parallel architecture), including the validation and testing steps.
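Because the open-loop network receives the measured targets as its feedback input, training reduces to ordinary supervised regression on fixed input/target pairs. The sketch below shows that idea with a swapped-in technique: a linear least-squares fit (`numpy.linalg.lstsq`) instead of neural network training. The helper `open_loop_design`, the coefficients, and the noise-free data are hypothetical, and the validation and testing steps are not shown.

```python
import numpy as np

def open_loop_design(x, t, input_delays, feedback_delays):
    """Build the open-loop (series-parallel) regression problem.

    Each row holds delayed inputs and delayed *measured* targets,
    and the corresponding entry of b is the target to predict.
    """
    max_delay = max(max(input_delays), max(feedback_delays))
    rows, targets = [], []
    for k in range(max_delay, len(t)):
        rows.append([x[k - d] for d in input_delays]
                    + [t[k - d] for d in feedback_delays])
        targets.append(t[k])
    return np.array(rows), np.array(targets)

# Hypothetical noise-free system with input and feedback delays 1 and 2.
rng = np.random.default_rng(1)
x = rng.normal(size=200)
t = np.zeros(200)
for k in range(2, 200):
    t[k] = 0.8 * x[k - 1] - 0.4 * x[k - 2] + 0.5 * t[k - 1] - 0.2 * t[k - 2]

A, b = open_loop_design(x, t, [1, 2], [1, 2])
coef, *_ = np.linalg.lstsq(A, b, rcond=None)  # approximately [0.8, -0.4, 0.5, -0.2]
```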

Display the trained network.

Calculate the network output `Y`, final input states `Xf`, and final layer states `Af` of the open-loop network from the network input `Xs`, initial input states `Xi`, and initial layer states `Ai`.
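Final states are useful because they let a later simulation pick up exactly where an earlier one stopped. The sketch below runs a hypothetical fixed model with input and feedback delays 1 and 2 in open loop and returns the last two input and target values as its final states. These states play the role the text gives `Xf` and `Af`, but the toolbox stores them differently. Running the whole sequence at once gives the same outputs as running two chunks, where the second chunk is seeded with the first chunk's final states.

```python
import numpy as np

def run_open_loop(model, xs, ts, x_state, t_state):
    """Open-loop run of a model with input and feedback delays 1 and 2.

    x_state and t_state hold the two values preceding xs and ts
    (oldest first). Returns the outputs and the final states, i.e. the
    last two input and target values, which can seed the next run.
    """
    x_hist = list(x_state) + list(xs)
    t_hist = list(t_state) + list(ts)
    y = [model(x_hist[k - 1], x_hist[k - 2], t_hist[k - 1], t_hist[k - 2])
         for k in range(2, len(x_hist))]
    return np.array(y), x_hist[-2:], t_hist[-2:]

def model(x1, x2, t1, t2):
    # Hypothetical fixed "trained" model.
    return np.tanh(0.8 * x1 - 0.4 * x2 + 0.5 * t1 - 0.2 * t2)

rng = np.random.default_rng(2)
x = rng.normal(size=40)
t = rng.normal(size=40)

# One run over steps 2..39, seeded with steps 0 and 1.
y_full, _, _ = run_open_loop(model, x[2:], t[2:], x[:2], t[:2])

# Two runs: the final states of the first run seed the second run.
y_a, xf, tf = run_open_loop(model, x[2:20], t[2:20], x[:2], t[:2])
y_b, _, _ = run_open_loop(model, x[20:], t[20:], xf, tf)
```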

Calculate the network performance.
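The performance value is an error measure between the targets and the network outputs. The sketch below assumes the measure is mean squared error, which is the usual default for these networks. The function name `mse` and the sample values are illustrative.

```python
import numpy as np

def mse(targets, outputs):
    """Mean squared error between target and output sequences."""
    targets = np.asarray(targets, dtype=float)
    outputs = np.asarray(outputs, dtype=float)
    if targets.shape != outputs.shape:
        raise ValueError("targets and outputs must have the same shape")
    return float(np.mean((targets - outputs) ** 2))

# Errors are 0, -0.5 and 1, so the MSE is (0 + 0.25 + 1) / 3, about 0.4167.
perf = mse([1.0, 2.0, 3.0], [1.0, 2.5, 2.0])
```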

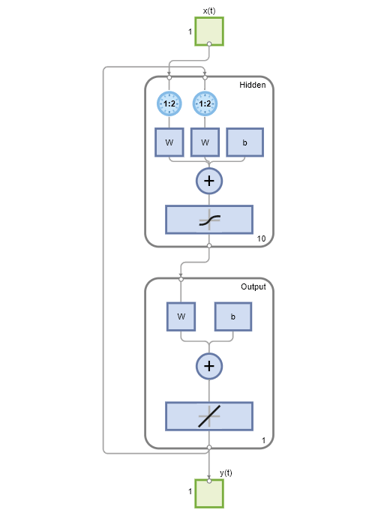

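To predict the output for the next 20 time steps, first simulate the network in closed-loop mode. The final input states `Xf` and layer states `Af` of the open-loop network `net` become the initial input states `Xic` and layer states `Aic` of the closed-loop network `netc`.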
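The state handover and the 20-step closed-loop run can be sketched as follows. The last two input and output values at the end of the open-loop data seed the forecast. After that, each prediction is fed back as the next feedback value, while the external input is still read from the prediction data. The model, the `closed_loop_forecast` helper, and the 80/20 split are hypothetical. Because the data are generated exactly by the model, the forecast reproduces them.

```python
import numpy as np

def closed_loop_forecast(model, x_future, x_state, y_state):
    """Closed-loop multistep forecast for input and feedback delays 1 and 2.

    x_state and y_state are the last two input and output values
    (oldest first) from the end of the open-loop run. Each prediction is
    fed back as the next feedback value, so no future targets are needed.
    """
    x_hist = list(x_state)
    y_hist = list(y_state)
    preds = []
    for x_now in x_future:
        y_next = model(x_hist[-1], x_hist[-2], y_hist[-1], y_hist[-2])
        preds.append(y_next)
        y_hist.append(y_next)  # internal feedback replaces the missing target
        x_hist.append(x_now)   # the external input is still observed
    return np.array(preds)

def model(x1, x2, y1, y2):
    # Hypothetical model; the data below are generated by it exactly.
    return np.tanh(0.8 * x1 - 0.4 * x2 + 0.5 * y1 - 0.2 * y2)

rng = np.random.default_rng(3)
x = rng.normal(size=100)
t = np.zeros(100)
for k in range(2, 100):
    t[k] = model(x[k - 1], x[k - 2], t[k - 1], t[k - 2])

# Hand over the states at steps 78 and 79, then forecast steps 80..99.
yc = closed_loop_forecast(model, x[80:], x[78:80], t[78:80])
```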

Display the closed-loop network.

Run the prediction for 20 time steps ahead in closed-loop mode.

Yc = 1×20 cell array
Columns 1 through 5: {[-0.0156]} {[0.1133]} {[-0.1472]} {[-0.0706]} {[0.0355]}
Columns 6 through 10: {[-0.2829]} {[0.3809]} {[-0.2836]} {[0.1886]}
Columns 11 through 15: {[-0.1813]} {[0.1373]} {[0.2189]}
Columns 16 through 20: {[-0.0156]} {[0.0724]} {[0.3395]} {[0.1940]} {[0.0757]}